Any plans to include audio?

We do not currently plan on integrating sounds into the Pulse package.

Generally, the games and manikins that Pulse is integrated with have their own presentation sounds and graphics. We tried to keep things as simple and generic as possible so that users can easily brand their own custom integrations.

It would be pretty nice to have a fully functioning vitals monitor with custom alarm thresholds along with all the traditional boops and beeps. But again, most applications have wanted more control over their presentation and have not wanted this level of default detail.

As more users adopt Pulse in their applications and give us feedback, we will do our best to support the community’s requests.

It would also be a great application to pair Pulse with something that could present both the physiology of a distressed lung as well as the breath sounds.

Let us know how you use Pulse. We would love to hear how it’s being used and what users need.

Thanks!

Hi @Ben_Engel. Thanks for reaching out!

As @abray explained above, the main reason we don’t currently have audio in the Unity Asset is to keep it generic. That also means it’s pretty easy to add audio tailored to your specific needs with the current asset. Similarly to the PulseDataLineRenderer, or the even simpler PulseDataNumberRenderer, you could create a custom class that inherits from PulseDataConsumer. All you then need to do in that class is override UpdateFromPulse to play the audio you need when the Pulse data and your inputs (threshold, audio file) meet the condition you’re looking for.
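To illustrate the shape of that approach, here is a language-neutral sketch in Python. It is not Unity C# and not the asset’s real API: PulseDataConsumerSketch, ThresholdAudioConsumer, update_from_pulse and the play callback are hypothetical stand-ins for PulseDataConsumer, your subclass, UpdateFromPulse and an AudioSource. The subclass overrides the update method and plays a sound when the latest value of its field is above a user-supplied threshold.

```python
from typing import Callable, List, Sequence


class PulseDataConsumerSketch:
    """Hypothetical stand-in for the asset's PulseDataConsumer base class."""

    def update_from_pulse(self, values: Sequence[float]) -> None:
        raise NotImplementedError


class ThresholdAudioConsumer(PulseDataConsumerSketch):
    """Plays a sound whenever the latest value of its field is above a threshold."""

    def __init__(self, threshold: float, play: Callable[[], None]) -> None:
        self.threshold = threshold  # user input, like a public Inspector field
        self.play = play            # stands in for playing an AudioSource

    def update_from_pulse(self, values: Sequence[float]) -> None:
        if not values:
            return
        latest = values[-1]  # only the latest value is stored per field
        if latest > self.threshold:
            self.play()


# Usage: feed three heart-rate updates through the consumer.
plays: List[str] = []
alarm = ThresholdAudioConsumer(threshold=120.0, play=lambda: plays.append("beep"))
for hr in [80.0, 125.0, 130.0]:
    alarm.update_from_pulse([hr])
print(plays)  # ['beep', 'beep']
```

Note that this simple level check fires on every update while the value stays above the threshold. That is fine for a continuous alarm, but not for a one-shot sound.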

We hope to create an example illustrating this when we have funding to work on it. In the meantime, I created an issue in our issue tracker to keep track of it.

Hope this helps. Please reach out again if you have more questions.

I did get my own audio working just fine. I just wondered if I was reinventing the wheel.

I’m building a VR sim for trauma leads, and we need to make sure they get stressed the same way they would in real life. Audio is a large part of that.

I’ll also be building out traumas like gunshots and amputations as an add-on to the great work your team has already done.

Neat, thanks for the feedback @Ben_Engel. Please share your results if you can. We’re excited to learn what people do with this, and to spread the word to get more users and developers to join the fun.

Would you mind sharing this? We’re writing an AR anaesthesia simulator for trainees and a falling pulse oximeter sound always adds to the stress!

Thanks for the package, Kitware. As an anaesthetist I’m very much out of my depth, but I was still able to set everything up very quickly. I would love to implement more actions in the future, and we’ll look to write websocket controls for the Unity script so the instructor can remotely control the engine via a phone app.

That looks great @smarm

I have been looking at setting up ZeroMQ with Pulse to be able to call a Pulse engine remotely.

Remember that Pulse is built on Protobuf and the common data model was designed with networking in mind, so check out things like: https://medium.com/@ahmadb/google-protocol-buffers-with-websockets-over-https-in-node-js-express-7ea78157394e

Keep me posted! I would love to help out. Maybe we can set up a public repo to work on this collaboratively.

I should also be revising the C# interface next month. I hope to get more of the C++ actions and features supported in C# then.

@Ben_Engel if you believe you have something generic that could be reusable, we’d love to see that contributed back to the unity asset repo!

@smarm @alexis.girault

https://uofu.box.com/s/n42viz36rp7yov8trkv95niln11780os

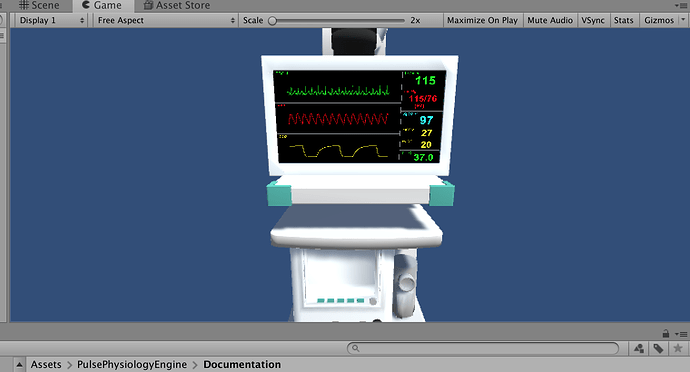

Here is a link to everything that should make audio work in your existing project. It looks like you are using the VitalsMonitor scene, so save your copy somewhere else before importing this, because the package contains the same scene. Once it’s imported, go into monitor prefab -> screen -> left -> ECGIII -> lineRenderer and set an audio source and a sound value. The sound value is a threshold checked against the line: if the line goes over that value, it plays the sound.

@Ben_Engel Looks like your file/folder is removed or private?

Sorry, it looks like it only lets me share things with people inside my university, which is dumb. Here is a new link.

@Ben_Engel Thanks a lot for sharing, we’ll give that a look!

Thanks for the collab offer @abray. As a relative coding newbie, I’ll muddle through and learn a bit more before taking you up on it.

Thanks @Ben_Engel for uploading that example

Thanks for uploading, Ben, but I couldn’t get it working as described. I’ve played around with the script, but it appears to trigger the audio file on every rendered frame.
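A common cause of every-frame triggering is a level check. If the sound plays whenever the value is above the threshold, it replays on every frame for as long as the trace stays above it. We have not verified that this is what happens in Ben’s script. The usual fix is to trigger only on the rising edge, the moment the value crosses from below to above. Below is a minimal, language-neutral sketch in Python. RisingEdgeTrigger and its play callback are hypothetical names, not part of the Pulse asset or Unity.

```python
from typing import Callable, List


class RisingEdgeTrigger:
    """Fires once when a value crosses above a threshold, not on every frame."""

    def __init__(self, threshold: float, play: Callable[[], None]) -> None:
        self.threshold = threshold
        self.play = play             # stands in for playing an AudioSource
        self._was_above = False      # state remembered between frames

    def update(self, value: float) -> None:
        is_above = value > self.threshold
        # Play only on the transition from "below" to "above".
        if is_above and not self._was_above:
            self.play()
        self._was_above = is_above


# Usage: an ECG-like trace sampled once per frame, with two peaks that each
# stay above the threshold for several frames.
plays: List[str] = []
trigger = RisingEdgeTrigger(threshold=0.5, play=lambda: plays.append("beep"))
for sample in [0.1, 0.2, 0.9, 1.0, 0.8, 0.2, 0.1, 0.7, 0.9, 0.3]:
    trigger.update(sample)
print(len(plays))  # 2 (a plain level check would have fired 5 times)
```

The only extra state is one boolean per trigger, which in a Unity component would be a private field kept between updates.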

I’m trying to script it to trigger the audio once every 60/HR seconds, but I’m brand spanking new to C# and not sure how to extract the heart rate value in the PulseDataNumberRenderer script. The variable dataValues appears to pull values for all displayable parameters at that point, and I’m not sure how to separate out the ones I want (HR and SpO2).

If anyone could point me the right way, I’d be grateful. Sorry for the newbie questions.

No problem @smarm, this is the place to ask!

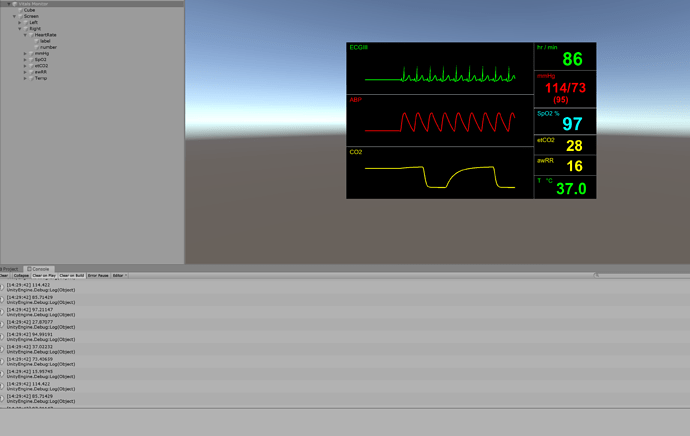

Components that derive from PulseDataConsumer, like the PulseDataNumberRenderer, only read one field from the PulseData array. The index of that field is defined here, and it is set by the combo box named “Data field” in the Editor UI (check out the “Consuming vitals data” section of PulseUnityAssetUserManual.pdf in the asset’s Documentation folder, or here).

If your PulseDataNumberRenderer instance also needs to display the heart rate (and therefore listens to the same field your audio needs), you can add some code inside UpdateFromPulse to play the audio when the value for that field meets your condition (above 60?). The values array actually holds all the values for that same field (so only HR). Currently it should contain only one value, since we only store the latest, so dataValue below is the latest value for your selected field.

Thanks Alex, I really appreciate the time you’re taking to help me find a solution. I think I’ll be right once I can read the data!

For example, using the demo scene and no other assets: when I edit the PulseDataNumberRenderer script attached to the Vitals Monitor/Screen/Right/Heart Rate/number object in the VitalsMonitor scene and add a simple Debug.Log of the dataValue variable, the console outputs all the current physiological variables, even though Heart Rate is the selected Data field in the Inspector for that script. I’ve attached a screenshot of the output. I suspect I’m pulling the data the wrong way, hence the errors.

Thanks again

I understand your confusion. The PulseDataNumberRenderer script is used by all the other number displays too, so when you edit it, the change affects every other display (I believe there are 8 of them in that scene). That is why your Debug.Log prints every physiological variable: each display logs the value of its own field. You can confirm this by loading one of the demo scenes with only one number renderer, or by disabling the other number renderers in this scene.
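The "one script, many instances" behaviour can be reproduced with a small, language-neutral Python sketch. NumberRendererSketch and the field names are hypothetical, not the asset’s API. Each instance is bound to its own field, like the “Data field” combo box, so a log added to the shared script prints one line per instance. Filtering on the instance’s field keeps only the heart-rate line.

```python
from typing import Dict, List, Sequence


class NumberRendererSketch:
    """One script, many instances: each instance is bound to its own data field."""

    def __init__(self, field: str) -> None:
        self.field = field  # like the "Data field" combo box in the Inspector

    def update_from_pulse(self, values: Sequence[float]) -> str:
        data_value = values[-1]  # latest value of *this instance's* field
        # A log added here runs for every instance, one line per field.
        return f"{self.field}: {data_value}"


# Hypothetical data: one latest value per field.
pulse_data: Dict[str, List[float]] = {
    "HeartRate": [72.0],
    "SpO2": [0.97],
    "RespirationRate": [14.0],
}
renderers = [NumberRendererSketch(field) for field in pulse_data]
log = [r.update_from_pulse(pulse_data[r.field]) for r in renderers]
print(log)     # ['HeartRate: 72.0', 'SpO2: 0.97', 'RespirationRate: 14.0']

# Keeping only the instance bound to heart rate.
hr_log = [entry for r, entry in zip(renderers, log) if r.field == "HeartRate"]
print(hr_log)  # ['HeartRate: 72.0']
```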

The simplest way to experiment with that would be to separate your audio experiments from the number renderer:

- Copy/paste PulseDataNumberRenderer as a new script (PulseDataAudioTrigger or something).
- Remove everything in there apart from how dataValue is computed in UpdateFromPulse.
- Add two public properties at the top of the class (similar to prefix, suffix, decimals…) to pass the AudioClip asset and the threshold frequency value.
- Use those two parameters inside UpdateFromPulse to play the audio when you want it to (@Ben_Engel’s code should help with that; I suppose it uses an AudioSource?).
- Add this component to the object with the HR PulseDataNumberRenderer, or to any empty object, as long as you set the field of that component to HR.
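For the "beep once every 60/HR seconds" goal, the steps above can be sketched in Python as a language-neutral illustration. This is not Unity C#. HeartbeatAudioTrigger, tick, min_heart_rate and the play callback are hypothetical names. The callback stands in for playing the AudioClip through an AudioSource, and min_heart_rate is one possible reading of the "threshold" input. The update method only stores the latest HR value. A per-frame tick, which in Unity would receive Time.deltaTime, accumulates time and beeps whenever a full beat interval has elapsed.

```python
from typing import Callable, List, Sequence


class HeartbeatAudioTrigger:
    """Hypothetical "PulseDataAudioTrigger" sketch: one beep per heartbeat."""

    def __init__(self, play: Callable[[], None], min_heart_rate: float = 1.0) -> None:
        self.play = play                      # stands in for AudioSource playback
        self.min_heart_rate = min_heart_rate  # below this, stay silent (avoids /0)
        self.heart_rate = 0.0
        self._time_since_beep = 0.0

    def update_from_pulse(self, values: Sequence[float]) -> None:
        if values:
            self.heart_rate = values[-1]  # latest HR, in beats per minute

    def tick(self, delta_seconds: float) -> None:
        """Call once per frame with the frame duration in seconds."""
        if self.heart_rate < self.min_heart_rate:
            self._time_since_beep = 0.0
            return
        self._time_since_beep += delta_seconds
        interval = 60.0 / self.heart_rate  # seconds between beats
        if self._time_since_beep >= interval:
            # Keep the remainder so the rhythm stays steady; at most one beep per frame.
            self._time_since_beep %= interval
            self.play()


# Usage: 12 frames of 0.25 s (3 seconds) at 60 bpm, then at 120 bpm.
beeps: List[str] = []
trigger = HeartbeatAudioTrigger(play=lambda: beeps.append("beep"))
trigger.update_from_pulse([60.0])
for _ in range(12):
    trigger.tick(0.25)
at_60 = len(beeps)             # 3 beeps in 3 seconds

trigger.update_from_pulse([120.0])
for _ in range(12):
    trigger.tick(0.25)
at_120 = len(beeps) - at_60    # 6 beeps in 3 seconds
print(at_60, at_120)           # 3 6
```

Separating "read the data" (update) from "keep time" (tick) means the beep rhythm does not depend on how often Pulse pushes new values, only on the latest heart rate.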

Hth